Ghidra on Docker accessed using X forwarding over SSH

Today I looked into getting Ghidra running on a remote machine in Docker and set it up to forward the display to my local machine over SSH.

To combat the impostor syndrome I have been feeling about my InfoSec knowledge, I decided to plan out talks on subjects I'm both excited about and know a bit about.

First up is binary exploitation, specifically around buffer overflows.

What does this have to do with Ghidra, I imagine you asking.

Well, I'm glad you asked...

As the first step towards being able to teach and talk about buffer overflows, I decided to quickly put together some code demonstrating how the overflow techniques work. That way they would be much easier to demonstrate and explain, as I'd have executables built from simple source code that clearly showed the issues I was presenting and behaved as expected.

After creating the first one, I decided it would be useful to have a way to reverse engineer the binary and check that it was structured the way I expected. This was mainly because I wanted to check that gcc wasn't optimising away one of the functions my exploit would rely on. Normally I would use Ghidra for this. There are other reversing tools out there, but this is the one I'm currently most familiar with.

To do the development work for the buffer overflow material, and to have somewhere to demonstrate it, I'd spun up a new Ubuntu virtual machine with the intention of using Docker to run each of the different binaries. The plan was for each executable to go into its own Docker container, set up so that I could nc into it and interact with the program. Ideally this would keep everything nice and clean and let me quickly start and stop the instances.

The problem with this is that everything is now remote, which doesn't really fit with using Ghidra from my normal laptop. I also didn't want to install Ghidra directly on the VM, as I was trying to keep it as clean and uncluttered as possible.

At this point, An Idea happened: why couldn't I also run Ghidra in a Docker container?

I couldn't immediately see any reason this wouldn't work and, fortunately, I had no one around to tell me it was a bad idea. So I set to work.

Usually when I start out with a fresh idea for something I want to Dockerise (not sure that's a word, but as this is my blog I'll use it anyway), I begin with a fairly blank image and iteratively add the packages I need as I work out how to get the software running. After a few tries, this is what I ended up with for my Dockerfile:

```dockerfile
FROM ubuntu:20.10

ENV DEBIAN_FRONTEND noninteractive

RUN apt update && \
    apt upgrade --auto-remove -y

RUN apt install -y \
        curl \
        wget \
        unzip \
        openjdk-11-jdk-headless && \
    rm -rf /var/lib/apt/lists/*

RUN useradd -ms /bin/bash ghidra
WORKDIR /home/ghidra
RUN chown ghidra:ghidra /home/ghidra && chmod 770 /home/ghidra
USER ghidra:ghidra

# Scrape the current release's SHA-256 and file name from the download page,
# download the zip, fail the build if the hash doesn't match, unpack it, and
# switch ghidraRun from launching in the background (bg) to the foreground (fg).
RUN N=$(curl -s https://ghidra-sre.org/ | grep 'code.*PUBL' | sed 's/^.*<code>\(.*\)<\/code>.*$/\1/'); \
    HASH=$(echo ${N} | cut -d " " -f 1); \
    FILE=$(echo ${N} | cut -d " " -f 2); \
    curl -s -o ghidra.zip https://ghidra-sre.org/${FILE}; \
    SHA=$(sha256sum ghidra.zip | cut -d " " -f 1); \
    if [ "${SHA}" != "${HASH}" ]; then \
        exit 1; \
    fi; \
    unzip ghidra.zip > /dev/null 2>&1; \
    mv *PUBLIC ghidra; \
    rm ghidra.zip; \
    sed -i 's/bg/fg/' /home/ghidra/ghidra/ghidraRun

CMD ["/home/ghidra/ghidra/ghidraRun"]
```

Luckily, not many dependencies were required. I had to do some messing around to parse the download URL out of the Ghidra website, and this file will probably break if the NSA choose to change the site too much. I initially tried to use GitHub for the downloads, but it looks like only source packages are there, and I wasn't feeling hardcore enough to build Ghidra from scratch as part of the process. I also had to change the provided start-up script so that Ghidra didn't go into the background. If it did, the start-up script would exit, Docker would see that the container's main process had finished, and it would shut the container down.
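The scraping and hash check in the long `RUN` step are easier to follow (and to try out without touching the website) when pulled out into a couple of shell functions. The sketch below does that. `extract_release_line` and `verify_sha256` are made-up names, and the sketch assumes, as the `sed` expression does, that the page kept the hash and file name together inside a single `<code>` element on a line containing "PUBL".

```bash
#!/bin/bash
# Hypothetical helpers mirroring the download step in the Dockerfile.

# extract_release_line: read the download page's HTML on stdin and print
# whatever sits inside <code>...</code> on the line mentioning "PUBL".
# ASSUMPTION: that text is "<sha256> <zip file name>", which is what the
# Dockerfile's cut commands expect.
extract_release_line() {
    grep 'code.*PUBL' | sed 's/^.*<code>\(.*\)<\/code>.*$/\1/'
}

# verify_sha256 FILE EXPECTED: succeed only if FILE's SHA-256 equals EXPECTED.
verify_sha256() {
    local actual
    actual=$(sha256sum "$1" | cut -d " " -f 1)
    [ "${actual}" = "$2" ]
}
```

At the time, something like `curl -s https://ghidra-sre.org/ | extract_release_line` would have printed the hash and file name for the advertised release, ready to be split with `cut` exactly as in the Dockerfile.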

This Dockerfile was then built by running `docker build --rm --tag ceisc/ghidra .` from the directory containing the Dockerfile.

This all seemed to work fine, but there was another hurdle to overcome:

AWTError - Can't connect to X11 window server using ':0.0' as the value of the DISPLAY variable.

It wasn't entirely unexpected that it couldn't find an X11 server to connect to; I had, after all, done nothing to make that side of it work.

My first step in resolving this was to make sure that I had X11 forwarding enabled on the SSH connection, so I added -X to the ssh command line. That turned out to be one of the simplest parts.

By default, X forwarding binds to the localhost address of the machine you've SSHed into, which doesn't lend itself to being reachable from a Docker container. There were probably two other ways round this: 1) run Docker with `--net host` to give the container access to the host's network, but that felt like too much access; or 2) change the sshd configuration (the `X11UseLocalhost no` option) to allow connections from outside localhost. Option 2 also felt wrong, as my X server would then be available from other parts of my network.

Luckily I stumbled over an excellent Stack Overflow answer which told me exactly what I needed to do:

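```shell
# Redirect X11 connections aimed at the docker0 bridge address (172.17.0.1)
# on port 6010 to the SSH-forwarded display listening on 127.0.0.1:6010.
iptables \
    --table nat \
    --insert PREROUTING \
    --proto tcp \
    --destination 172.17.0.1 \
    --dport 6010 \
    --jump DNAT \
    --to-destination 127.0.0.1:6010

# Allow packets arriving on docker0 to be routed to a 127.0.0.0/8 address.
sysctl net.ipv4.conf.docker0.route_localnet=1
```

Both commands need to be run as root. The iptables rule simply intercepts TCP connections to port 6010 (the port DISPLAY=:10 maps to) on the host's Docker bridge IP and redirects them to the same port on localhost. The sysctl setting is needed because, by default, the kernel treats packets arriving on a non-loopback interface such as docker0 with a 127.0.0.0/8 destination as martian and refuses to route them.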
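X servers that accept TCP connections listen on port 6000 plus the display number, which is where 6010 comes from. sshd starts numbering forwarded displays at 10 by default (its `X11DisplayOffset` setting), so a second simultaneous SSH session would typically get :11 and port 6011 instead. Here is a small sketch for working the port out from whatever DISPLAY the session was given; the function names are hypothetical, and it only handles the usual `host:display.screen` form.

```bash
#!/bin/bash
# Hypothetical helpers: derive the display number and X11 TCP port from a
# DISPLAY value of the form host:display[.screen], e.g. "localhost:10.0".

x11_display_number() {
    local d=${1##*:}   # strip everything up to the last colon: "10.0"
    echo "${d%%.*}"    # strip an optional ".screen" suffix:    "10"
}

x11_tcp_port() {
    # X servers listening on TCP use port 6000 + display number
    echo $(( 6000 + $(x11_display_number "$1") ))
}
```

Running `x11_tcp_port "$DISPLAY"` inside the SSH session gives the value to use for both `--dport` and `--to-destination` in the iptables rule if the display isn't :10.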

Once this was in place, I just needed to do some magic with xauth to ensure that the Docker container would be allowed to connect. This was wrapped up in a bash script that I use whenever I need to run Ghidra:

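```bash
#!/bin/bash

# xauth file that will be shared with the container
XAUTH=/home/ceisc/.ghidra/.docker.xauth

mkdir -p "$(dirname "${XAUTH}")"
touch "${XAUTH}"

# Copy the cookie for the current (SSH-forwarded) display, rewriting the
# address family to ffff (FamilyWild) so it matches any host address
xauth nlist "${DISPLAY}" | sed -e 's/^..../ffff/' | xauth -f "${XAUTH}" nmerge -

docker run --rm -d \
    -e DISPLAY="172.17.0.1:10" \
    -e XAUTHORITY="${XAUTH}" \
    -v "${XAUTH}:${XAUTH}" \
    ceisc/ghidra:latest
```

The launch script creates an xauth file in my home directory, extracts the access token for the current display and merges it into that file. The `sed` swaps each entry's address family for a wildcard, so the token is accepted even though the container connects via 172.17.0.1 rather than the host name the entry was created for. The script then launches my Docker image, passing through the DISPLAY environment variable so Ghidra knows where to connect, the XAUTHORITY environment variable so it knows where the xauth file is, and a volume mapping that puts the file at the same path inside the running container. It's also set to detach (`-d`) so we get our command prompt back, and to remove (`--rm`) the container once we're done with it.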
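Each line printed by `xauth nlist` is a series of hex fields, the first being a four-digit address family. Entries created by SSH forwarding are typically of the Local family (0100) and tied to a host name, which would not match a connection arriving from the container. Rewriting the family to ffff (FamilyWild) makes the cookie apply regardless of address. The sketch below isolates that `sed` and adds a small decoder for inspecting the result; both helper names are hypothetical.

```bash
#!/bin/bash
# Hypothetical helpers illustrating the xauth step from the launch script.

# wildcard_xauth_family: read `xauth nlist` lines on stdin and replace the
# first four characters of each (the hex address family) with ffff,
# i.e. FamilyWild. Same sed expression as the launch script.
wildcard_xauth_family() {
    sed -e 's/^..../ffff/'
}

# xauth_family_name: read ONE `xauth nlist` line on stdin and print a
# readable name for its address-family field.
xauth_family_name() {
    case "$(cut -d " " -f 1)" in
        0000) echo Internet ;;
        0100) echo Local ;;
        ffff) echo Wild ;;
        *)    echo Other ;;
    esac
}
```

With a single forwarded display, `xauth nlist "$DISPLAY" | xauth_family_name` should usually report Local, and piping the same line through `wildcard_xauth_family` first should report Wild, with every other field left untouched.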

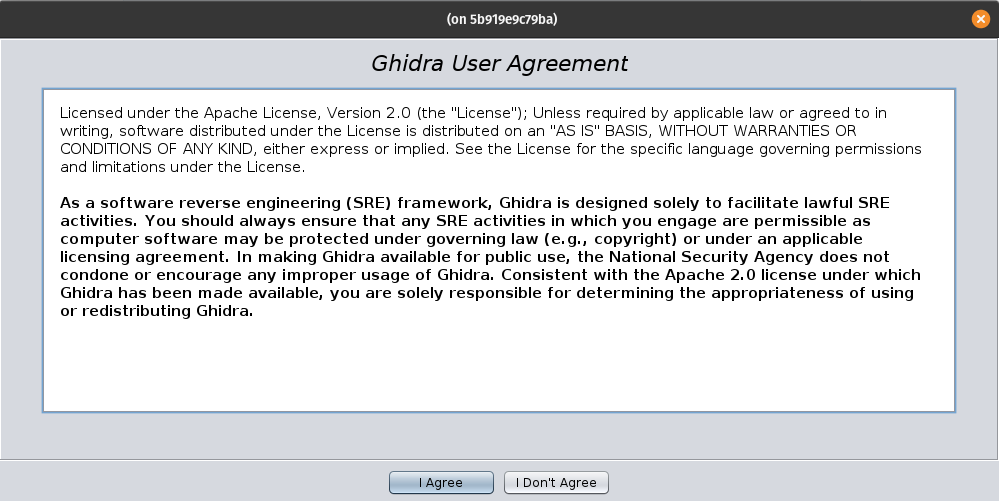

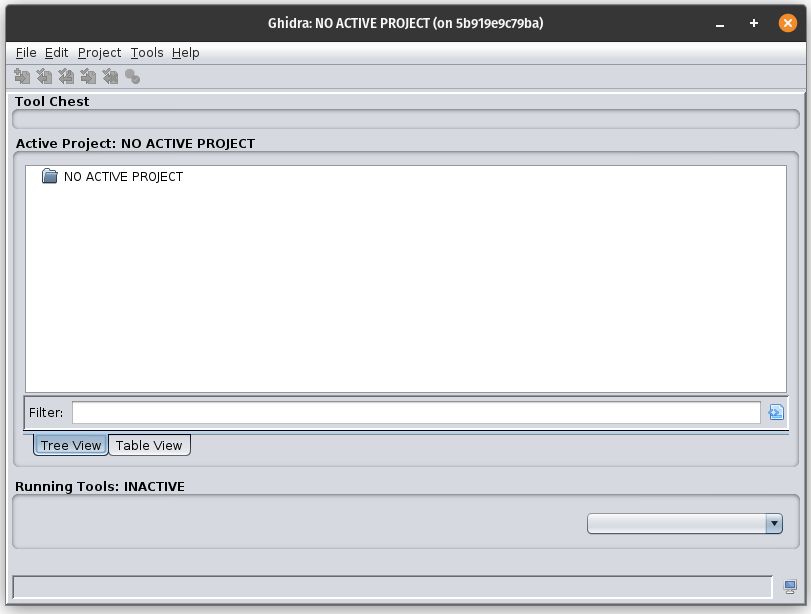

Running this script then did everything I was expecting and I was greeted with the very welcome sight of Ghidra firing up and displaying on my local machine:

All in all this really didn't take me that long to achieve (I have probably spent more time writing this article about it than it took to actually get running) and I'm very pleased with the result.

The only steps left to make this slightly better are to make the iptables and sysctl commands persist across machine restarts, and to modify the start-up script so that it maps through the directories containing the binaries I need to decompile.